- Generative AI has captivated us all–we can’t unsee it

- Implementing and governing generative AI is challenging

- Deploying generative AI requires a faster integration strategy

Generative AI has revolutionized our expectations of human-to-computer interaction, spurring executives to bring it into their enterprises to improve customer and employee experiences. Generative AI tools like ChatGPT from OpenAI and Bard from Google look promising, but many businesses are unsure of how to bring AI inside. Constraints around legacy technology and scarce resources limit how responsively IT organizations can build and integrate generative AI applications, and this worries executive management. Business leaders want to transform their business models using AI to improve processes and defend against competitive pressures.

How fast can you implement AI into your enterprise?

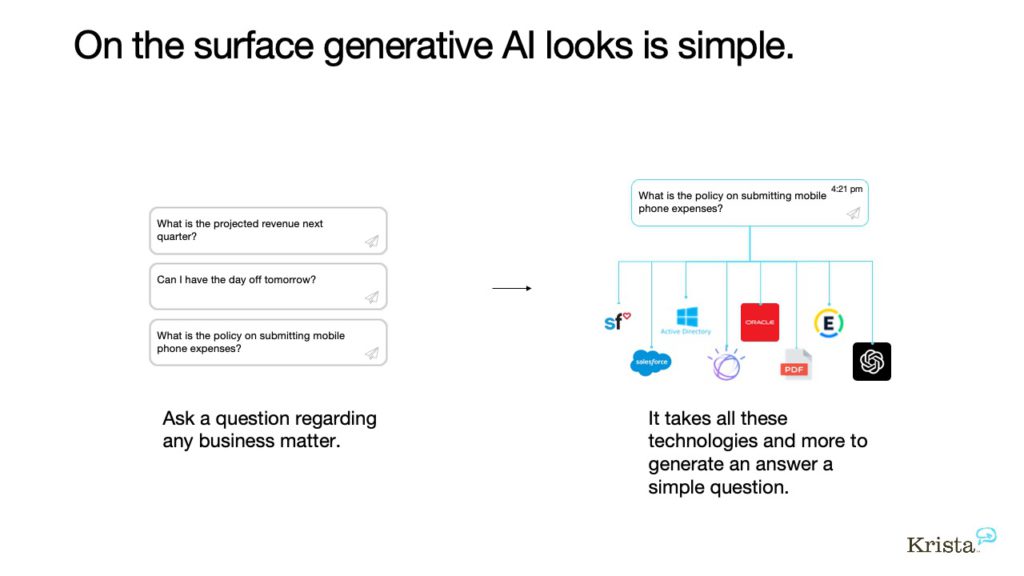

Generative AI technology like ChatGPT makes AI look easy. Ask AI a question, and it answers you. But then what? What’s the next step in your process? Do you need to inform a team member? Do you need to input the answer into another system? Do you need another AI service to complete more steps in the process and speed things up? How do you orchestrate all of the steps together? Is the model trained on YOUR data? Is it secure? Do you trust it?

These are important questions being asked inside every enterprise. Business leaders want AI technology and they want it now.

How fast can you securely integrate generative AI using your own data?

Implementing generative AI across systems is challenging

Natural language processing (NLP) and new generative AI technologies have captivated us with their ability to automate mundane tasks, understand large amounts of raw data quickly, and provide contextually relevant answers. However, integrating, deploying, and governing generative AI technologies with your training datasets is far from easy. Without the right infrastructure and technology in place, generative AI applications are typically relegated to isolated point solutions or small pilots that lack scalability. As a result, many businesses fail to realize the full potential of these advanced AI technologies and remain stuck in inefficient processes. To truly unlock AI capabilities, enterprises need modern integration methods that can quickly integrate and govern any AI with existing systems.

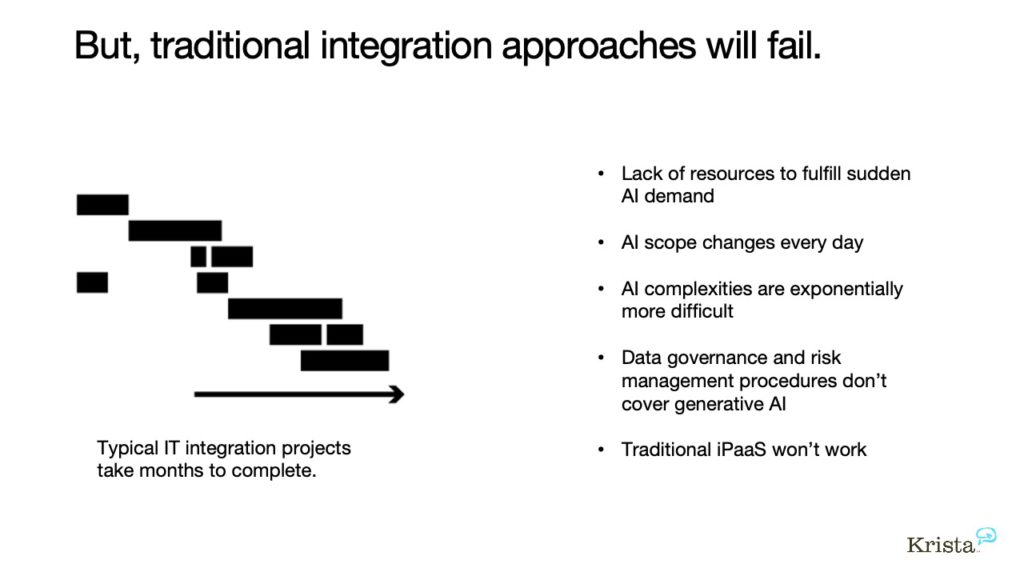

Deploying generative AI inside your enterprise quickly is a challenging endeavor. Traditional software development cycles are slow and time-consuming, stifling the speed of innovation. Most IT teams are at or near capacity and can’t fulfill the sudden demand to deploy the different AI tools that the business is asking for. Even if there is capacity, by the time you select and deploy an AI model or service, the scope will change and there will be hundreds of new AI alternatives to choose from. You therefore need to be able to quickly deploy and interchange AI services in your workflows and decision points, yet most enterprise teams have too many legacy systems and data privacy contingencies to deal with to do this quickly. Additionally, traditional iPaaS solutions are helpful when integrating systems but lack the speed, flexibility, and support for integrating generative AI within the context of data governance, making it difficult to securely integrate and deploy models. It’s no wonder that so many organizations are struggling to build generative AI applications into their enterprises quickly and securely. However, with the right platform in place, it is possible to unlock the full potential of AI more quickly and securely.

Why can’t I just use ChatGPT or Bard in my enterprise?

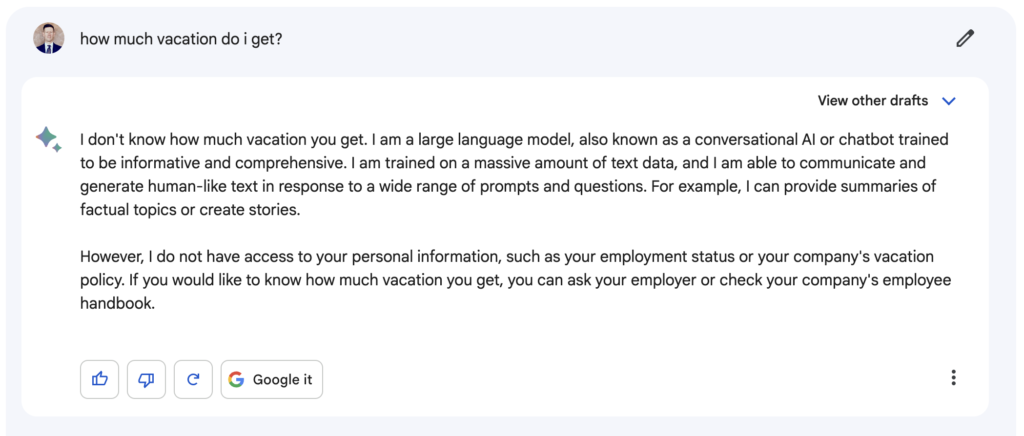

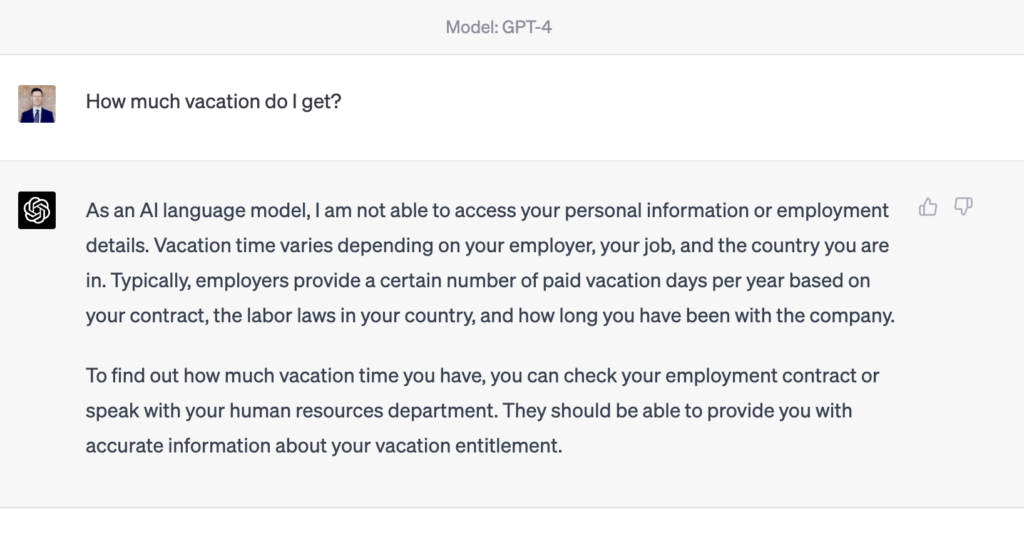

AI solutions for your business must deliver answers based on your company’s unique data. When you pose a question like “How much vacation do I get?” to ChatGPT or Bard, you receive a generic answer derived from internet-based training data, which might be accurate but is not tailored to your employees’ needs.

Effective generative AI must combine data from company-specific knowledge, understand the requester’s context, and maintain security, as not every answer should be accessible to all employees. For instance, when inquiring about vacation days, employees may be asking about PTO, sick leave, or flex days. The AI must provide answers considering the employee’s country, state, employment type (full-time, part-time, or contractor), and other relevant factors.

Traditional chatbots or search engines typically provide PDFs or policy documents that users must sift through to find relevant information. However, an artificial intelligence integration platform as a service (AI iPaaS) solves this problem by answering more specific questions in the user’s context. It gathers information about the employee, such as location and employment type, to refine the question and generate a more accurate response for many particular use cases.
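The context-gathering step described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular platform implements it: the `EmployeeContext` fields and the `refine_question` helper are hypothetical names chosen for this example, and a real system would read the profile from HR records rather than hard-coding it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmployeeContext:
    """Hypothetical profile fields; a real system would load these from HR data."""
    country: str
    state: str
    employment_type: str  # "full-time", "part-time", or "contractor"


def refine_question(question: str, ctx: EmployeeContext) -> str:
    """Attach the requester's context so the answer can be specific to them."""
    return (
        f"{question}\n"
        f"Requester context: country={ctx.country}, state={ctx.state}, "
        f"employment type={ctx.employment_type}"
    )


# Illustrative values only.
employee = EmployeeContext(country="US", state="TX", employment_type="part-time")
refined = refine_question("How much vacation do I get?", employee)
print(refined)
```

The refined text, rather than the bare question, is what would be passed to the search and generation steps, so the same question from two employees can produce two different answers.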

Additionally, securing sensitive data is crucial, and role-based access control should be in place when searching for information to construct answers. For example, only employees with an HR manager role or above should have access to the data generative AI uses to answer questions about a senior director’s salary. This ensures that sensitive information remains protected and that your organization keeps its data private, enhancing your enterprise’s AI security.
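One way to picture this rule is as a filter applied before any data reaches the generative model. The sketch below assumes a simple ranked list of roles; the role names, the `Record` type, and `readable_records` are hypothetical and exist only to illustrate the idea.

```python
from dataclasses import dataclass

# Hypothetical role ranking; higher numbers mean broader access.
ROLE_RANK = {"employee": 1, "manager": 2, "hr_manager": 3, "hr_director": 4}


@dataclass(frozen=True)
class Record:
    text: str
    min_role: str  # lowest role allowed to read this record


def readable_records(records: list[Record], role: str) -> list[Record]:
    """Return only the records the given role is allowed to see."""
    rank = ROLE_RANK.get(role, 0)  # unknown roles get no access
    return [r for r in records if rank >= ROLE_RANK[r.min_role]]


# Placeholder records for illustration.
records = [
    Record("General PTO policy text", "employee"),
    Record("Senior director salary details", "hr_manager"),
]

for role in ("employee", "hr_manager"):
    visible = [r.text for r in readable_records(records, role)]
    print(role, "->", visible)
```

Because the filter runs before the answer is constructed, the model never sees data the requester is not entitled to, so it cannot leak that data into a response.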

Generative AI integration requirements

Integrating generative AI or any other AI into your enterprise requires more than just plugging it in. Enterprises need assurances that their data is secure and that role-based governance processes are in place. They must be able to interact with existing systems in real time to provide relevant content and accurate answers to users in any format. If an answer or a conversation needs to involve other people, the AI should do so in the same context. This way, everyone understands the story and works toward the same goal. There are many considerations enterprises should make when building a secure and efficient AI environment. The following sections discuss these considerations and requirements and how best to fulfill them.

Must connect to enterprise systems in real-time

As enterprises increasingly harness AI tools to optimize workflows and boost business processes, integration of these solutions with real-time systems can prove challenging. An AI-powered iPaaS overcomes this obstacle, providing seamless connectivity between AI solutions and real-time systems. This unified platform integrates AI technologies such as OCR, Document Understanding, NLU, and NLG, orchestrating process flows and granting decision-makers real-time access to vital insights. Consequently, operational efficiency improves, and businesses can rapidly adapt to evolving market dynamics as AI products advance. The AI iPaaS simplifies the management of multiple integrations, reduces custom coding, and upholds strong security and compliance standards, ultimately fostering a more agile, data-driven, and competitive enterprise.

Generative AI workflows must address real-time data from enterprise systems, not just FAQs and static documents. Providing answers rather than pointing to a PDF holds value, but there’s much more! Imagine if the generative AI engine used your training data and had access to your orders, invoices, and purchase orders in real-time, extracting current statuses like invoice receipts, payments, purchase order end dates, remaining funds, or listed items. New capabilities like this enable customers to ask any question about these documents without requiring IT developers to pre-create logic for every possible query.

With generative AI understanding this information, users can ask questions like:

- “Do any of my POs expire in the next 6 months?”

- “Is product X on an active PO?”

- “Do I have any unpaid invoices?”

This approach hinges on generative AI’s ability to find answers within up-to-date information.
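To make the example questions concrete, here is a rough sketch of the lookups that would sit behind them once the question has been understood. The `PurchaseOrder` type, the helper functions, and the sample orders are all made up for illustration; in practice the data would come from the live system of record.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class PurchaseOrder:
    number: str
    end_date: date
    items: tuple[str, ...]


def expiring_within(orders, today: date, days: int = 183):
    """POs that are still active and end within roughly six months."""
    cutoff = today + timedelta(days=days)
    return [po for po in orders if today <= po.end_date <= cutoff]


def on_active_po(orders, product: str, today: date) -> bool:
    """True if the product is listed on any PO that has not yet ended."""
    return any(product in po.items and po.end_date >= today for po in orders)


# Sample data for illustration only.
today = date(2024, 1, 1)
orders = [
    PurchaseOrder("PO-1", date(2024, 3, 1), ("product X",)),
    PurchaseOrder("PO-2", date(2025, 1, 1), ("product Y",)),
]

print([po.number for po in expiring_within(orders, today)])  # ['PO-1']
print(on_active_po(orders, "product X", today))  # True
```

The point of the approach described above is that nobody has to hand-write one such function per question; the sketch only shows what "finding answers within up-to-date information" amounts to for two of the sample queries.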

AI should “do” things (chat is not an outcome, it’s one step in a process)

Generative AI is incredibly impressive. Its ability to produce content from existing models will help organizations generate more text and content, but this is only one step in a longer process. Take, for instance, when a customer contacts an organization for help. Generative AI can help craft useful responses to this customer but is limited in its ability to find customer information, update systems or hand the same contextual conversation to a person. For generative AI to be helpful, it must “do” something or bring a situation to closure.

To truly operationalize AI, it must connect to real-time systems, interact with multiple people, and facilitate actions that lead to tangible business outcomes. This might start with a simple one-on-one conversation, but the scope is much larger, as it must accommodate the complexities and tasks that arise from such exchanges. And therein lies the crux of the matter: AI automation must be able to “do” things, not just generate content in a chat.

Know who is allowed to know what!

An AI iPaaS should cater to enterprise needs and must provide robust support for data and governance models to ensure the highest levels of security and compliance. This involves implementing strict role-based access control measures to prevent unauthorized personnel from accessing sensitive data. The platform should adhere to a “least privilege” principle, where individuals are granted access only to the information they need to perform their job functions effectively. For instance, you can’t have employees asking questions about other employees’ salaries or vacation privileges if you have generative AI connected to your human resources systems. In addition to advanced user privilege and security protocols, an AI integration platform should provide a comprehensive audit trail and real-time monitoring capabilities to track data access, usage, and modifications for risk management and compliance.

Effortlessly integrate diverse AI tools

Enterprises must be able to use various third-party AI tools flexibly and ensure their interchangeability. Adopting this approach lets organizations seamlessly integrate and manage a wide range of AI solutions, enabling them to select the best tools for their unique needs without being tied to a single vendor. This agility allows businesses to stay current with the fast-paced AI landscape, capitalizing on emerging advancements and innovations. An AI integration platform simplifies incorporating third-party AI tools into new or existing workflows using “Ask AI”, which reduces complexity and fosters efficiency. Consequently, enterprises can leverage diverse AI technologies to ensure interchangeability, drive innovation, enhance processes, and maintain a competitive edge in the market.

Generative AI and NLU represent just two broad categories of AI, but you’ll want access to other capabilities for end-to-end workflows. To automate more extensive processes, you might need to determine a product’s availability for expedited shipping. Machine learning models can assess whether expedited shipping is possible today, tomorrow, or not at all. AI models can also evaluate invoices to identify normalcy or anomalies. Countless use cases could assist in decision-making or eliminate bottlenecks in processes. Your data scientists may develop customized and trained models for specific purposes like these, but those models often lack interfaces that business users can work with. Data scientists are trained in modeling, not in usability or process design. You’ll want a way to orchestrate custom or third-party models into a conversational user interface to ensure you produce more value from your useful machine-learning models.
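Interchangeability of this kind usually comes down to having workflow steps depend on a shared interface rather than on a specific vendor. The sketch below shows the idea with Python's `typing.Protocol`; the `TextModel` interface and the two stand-in model classes are hypothetical and do not represent any real vendor SDK.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only thing a workflow step needs from any AI service."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for one vendor's model."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperModel:
    """Stand-in for a different vendor's or an in-house model."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def answer_step(model: TextModel, question: str) -> str:
    """A workflow step that depends only on the shared interface."""
    return model.complete(question)


# Swapping the model requires no change to the workflow step itself.
print(answer_step(EchoModel(), "Is expedited shipping available?"))
print(answer_step(UpperModel(), "Is expedited shipping available?"))
```

A custom model built by your data scientists can be wrapped the same way, which is what lets it be exposed through a conversational interface alongside third-party services.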

Involve many people in the same context

Enterprises need an AI integration platform to orchestrate processes across people and different platforms within the same context to ensure a comprehensive understanding of each unique situation. Enterprise processes often span different platforms and legacy systems and maintaining context across different platforms is difficult. By orchestrating processes in the same context, organizations can effectively coordinate tasks, information sharing, and decision-making among team members to simplify work. Sharing context enhances collaboration and fosters a unified approach to problem-solving. Providing team members with relevant data, insights, and contextual information in a single view empowers them to make informed decisions tailored to each specific circumstance. Ultimately, this leads to improved efficiency, better alignment among team members, and the ability to respond quickly to dynamic business environments.

Efficiently synthesize vast data volumes

Generative AI, with capabilities like text completion, summarization, and content generation, can handle small amounts of proprietary data, typically between 2,000 and 6,000 bytes, or about 3 to 4 pages of content. However, such limited data per request may prove insufficient for many enterprise use cases, requiring the input of larger datasets to obtain relevant and contextual answers from backend systems.

The primary challenge in utilizing generative AI involves distilling massive volumes of enterprise data into the minimal yet adequate amount necessary for the model to generate accurate responses. For example, attempting to find specific answers to HR-related questions, such as benefits and vacation policies, by inputting your entire HR manual into ChatGPT would result in an error due to its size.

As generative AI models advance, they may become more adept at processing larger datasets. In the meantime, organizations may try to work around the data input limitations or compress their terabytes of information into a few pages of crucial content so that AI models like ChatGPT can offer insights based on the specific content provided. However, these attempts will most likely fail to produce enough value to justify the amount of work.

Synthesizing terabytes of data is crucial for leveraging generative AI like ChatGPT, but it presents challenges. Enterprises require larger data inputs. A more scalable method is to employ fuzzy logic, semantic search, and document understanding to analyze all company information and return the relevant or applicable paragraphs based on the query. This approach ensures accurate answers are obtained, rather than just any answer derived from an excessive data subset fed to the generative AI model. Identifying the correct data and providing it in manageable chunks enables processing and accurate answer retrieval. Relying on generative AI to process larger amounts of data may not be feasible; instead of building solutions to handle larger datasets, use an AI integration platform to orchestrate this process.
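The "find the right paragraphs, then send only those" idea can be sketched as follows. Note the simplification: this example ranks paragraphs by plain word overlap with the query, which is only a stand-in for the semantic search and document understanding described above. The function names, the size budget, and the sample manual text are all illustrative assumptions.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_paragraphs(query: str, paragraphs: list[str], max_chars: int) -> list[str]:
    """Pick the paragraphs sharing the most words with the query,
    keeping the combined size within max_chars."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(p)), p) for p in paragraphs]
    scored.sort(key=lambda pair: pair[0], reverse=True)

    chosen, used = [], 0
    for score, paragraph in scored:
        if score == 0:
            break  # the rest share no words with the query
        if used + len(paragraph) > max_chars:
            continue  # skip paragraphs that would exceed the budget
        chosen.append(paragraph)
        used += len(paragraph)
    return chosen


# Sample manual text for illustration only.
manual = [
    "Full-time employees accrue vacation days each month.",
    "Expense reports are due by the fifth business day.",
    "Contractors do not accrue vacation days.",
]
context = select_paragraphs("How many vacation days do I get?", manual, max_chars=120)
print(context)
```

Only the selected paragraphs would be placed in the request to the generative model, which keeps the input within its size limit while still grounding the answer in company content.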

Chat from desktop to mobile platforms to SMS

Generative AI and other AI tools must support conversations across every channel. It is crucial to address diverse use cases from employees in the office, employees in the field, and customers at home. By enabling seamless communication across web, mobile app, and SMS platforms, these AI tools can cater to user preferences and provide a consistent and contextual user experience. This omnichannel approach is vital because it ensures that employees and customers can engage with the AI in their preferred medium, thus enhancing internal and external customer satisfaction throughout your company. Moreover, updating systems in real time plays a crucial role in maintaining accurate and up-to-date information across all channels. This real-time synchronization empowers enterprises to make informed decisions, deliver efficient customer support, and foster a positive brand image, ultimately leading to long-term success.

Deal with long-running conversations

Generative AI and other AI tools need to support long-running conversations, as this capability is essential for providing personalized and efficient user experiences. Long-running conversations are spread out over multiple days, weeks, or even months, and the AI must identify the context of each one and respond in an accurate, helpful way. To enable this type of conversation support, your AI tools and processes must be able to store previous conversations and query relevant data quickly and accurately across longer time periods.

This is where an AI integration platform will be essential. An AI integration platform logs activity to support compliance and role-based access. The same logs support long-running conversations and automations to help employees and customers on longer journeys. For instance, employee onboarding is sometimes a year-long process that includes initial data entry at the beginning of an employee’s tenure through training and then follow-ups and reviews. To facilitate these extended interactions, AI tools must recognize users and identify their positions within their individual journeys on several types of processes and workflows. This level of understanding is crucial because it enables AI systems to offer tailored assistance and insights, fostering a sense of continuity and familiarity for users. By supporting long-running conversations, AI tools can develop stronger relationships with users, leading to increased customer satisfaction, loyalty, and long-term success for businesses that implement these advanced technologies.
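As a rough sketch of what "recognizing users and their position in a journey" involves, the example below keeps one record per user and process and lets a later conversation resume where the earlier one stopped. The `Journey` and `JourneyStore` names, the in-memory dictionary, and the onboarding steps are assumptions for illustration; a production system would persist this state durably.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Journey:
    """Tracks one user's progress and message history in one process."""
    user_id: str
    process: str
    steps: list[str]
    completed: int = 0
    history: list[tuple[datetime, str]] = field(default_factory=list)

    def current_step(self) -> Optional[str]:
        """The next step to work on, or None when the journey is finished."""
        return self.steps[self.completed] if self.completed < len(self.steps) else None

    def record(self, message: str) -> None:
        """Log an interaction with a UTC timestamp."""
        self.history.append((datetime.now(timezone.utc), message))

    def advance(self) -> None:
        if self.completed < len(self.steps):
            self.completed += 1


class JourneyStore:
    """In-memory store keyed by (user, process)."""

    def __init__(self) -> None:
        self._journeys: dict[tuple[str, str], Journey] = {}

    def get_or_start(self, user_id: str, process: str, steps: list[str]) -> Journey:
        key = (user_id, process)
        if key not in self._journeys:
            self._journeys[key] = Journey(user_id, process, list(steps))
        return self._journeys[key]


store = JourneyStore()
steps = ["data entry", "training", "follow-up", "review"]

journey = store.get_or_start("emp-42", "onboarding", steps)
journey.record("Submitted new-hire forms")
journey.advance()

# Weeks later, a new conversation picks up the same journey.
resumed = store.get_or_start("emp-42", "onboarding", steps)
print(resumed.current_step())  # training
```

The same history that lets a conversation resume can also serve as the activity log mentioned above for compliance purposes.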

Avoid software development cycles

Enterprises need capabilities to build and modify workflows without lengthy software development cycles. The desired agility causes enterprises to invest in low-code and no-code tools to support dynamic business needs and conditions. According to Gartner, “By 2025, 70% of new applications developed by organizations will use low-code or no-code technologies.” This is triple the amount from just two years ago.

For enterprises to quickly react to faster cycles, it is essential to remove IT constraints and become increasingly agile to maintain a competitive edge. Adapting to changing market conditions, customer needs, and emerging trends is crucial for staying ahead of competitors and seizing new opportunities. Utilizing a low-code AI iPaaS empowers organizations to create and adjust AI-led workflows to respond more effectively to internal and external challenges, optimize business processes, and enhance overall efficiency. This streamlined approach reduces the time and resources spent on traditional software development cycles, allowing businesses to allocate these valuable resources toward innovation and growth.

What is an AI iPaaS?

An AI integration platform as a service (AI iPaaS) is a cloud-based service that provides organizations with the artificial intelligence (AI) and machine learning (ML) tools, resources, and infrastructure needed to build, deploy, and manage AI-powered applications and solutions. An AI iPaaS typically includes pre-built models, APIs, and development frameworks that allow enterprises to easily integrate AI capabilities into their existing systems or create new applications. Not only does an AI iPaaS enable easy integration of AI tools, it also provides a low-code or no-code configurator for non-technical business users to build both

The benefits of using AI iPaaS include:

- Scalability: As a cloud-based service, an AI iPaaS can easily scale to accommodate increased demand or workloads.

- Cost-effectiveness: Organizations can access powerful AI and ML resources without having to invest in expensive hardware and infrastructure.

- Flexibility: AI iPaaS offers a wide range of tools and services, making it suitable for various industries and applications.

- Simplified management: Users can manage their AI applications and resources through a centralized platform, making it easier to monitor performance and make adjustments as needed.

- Faster time-to-market: By leveraging pre-built models and low-code tools, organizations can speed up the development process and bring their AI solutions to market more quickly.

Krista is an AI iPaaS

Krista is a revolutionary AI integration platform as a service (AI iPaaS) designed to easily bring any AI into your enterprise systems and processes to help your people get more done. Krista utilizes a low-code suite of tools enabling you to integrate AI into processes spanning your people, systems of record, data stores, messaging systems, and omnichannel interfaces. With Krista, implementing AI into your enterprise systems and workflows can be done with less time and effort than ever before via hundreds of available connectors. Krista’s NLP-enabled AI iPaaS provides natural language capabilities to integrate AI into your existing systems without the need for expensive infrastructure or software development resources. Krista is the perfect fit for unlocking the potential of AI in your enterprise.

Krista integrates any AI into your business

Krista’s AI iPaaS integrates and deploys third-party AI technologies and machine learning with your existing systems. Krista’s no-code AI iPaaS enables you to easily implement any AI into your business without manual coding, enabling your business to realize quick time to value. Krista provides hundreds of AI and API connectors to help you quickly deploy generative AI into a process or a department. Krista’s conversational AI interface provides role-based security access and event logging to help you govern data and create automated compliance processes. With these capabilities in place, you can finally take advantage of the full potential of natural language processing and generative AI without having to worry about integration complexities or scalability. Krista is the ideal AI platform for enterprises that want to ensure their data remains secure, use AI technologies effectively and efficiently, and stay competitive in today’s digital economy.

How Krista deploys generative AI in your enterprise

Krista provides a powerful and easy-to-use platform that enables enterprises to quickly deploy generative AI into their existing systems. Krista provides generative AI and ChatGPT functionality to generate text and content from your enterprise applications. This allows businesses to build and deploy proprietary chat solutions that leverage the power of ChatGPT for natural, conversational interactions. With Krista’s AI iPaaS, enterprises can also integrate other popular AI technologies that could provide decision support or automation. Through Krista’s platform, businesses can quickly and easily deploy any AI.

Scalable and Secure

Krista’s AI iPaaS provides a secure and scalable platform for deploying AI solutions. With Krista, businesses can easily integrate their existing systems with any AI technologies to quickly answer queries, automate processes, and gain insights from their data. Krista offers advanced governance capabilities such as role-based security access and event logging, so organizations can feel confident that their data is secure and compliant. Krista’s scalability also allows organizations to quickly deploy AI processes across multiple departments, ensuring that the platform can scale with their business.

Cost-effective

Krista’s AI iPaaS provides a cost-effective solution for deploying generative AI in an enterprise. By leveraging the platform, businesses can take advantage of a low-code suite of tools to quickly and easily deploy AI into their existing systems without manual coding. Krista provides hundreds of powerful app and AI integrations and connectors enabling businesses to quickly deploy AI solutions without worrying about costly infrastructure, integration, or software development resources.

Flexible

Krista’s AI iPaaS is a highly flexible platform that allows businesses to quickly deploy, interchange, and test different AI solutions in their enterprise. With Krista, businesses can rapidly integrate third-party AI technologies into their existing workflows and easily switch between different AI models as needed. Krista also provides detailed analytics so enterprises can track the performance of their AI solutions and make informed decisions about which AI services are most effective. With Krista’s easy-to-use platform, businesses can easily deploy the latest generative AI into their enterprise and take advantage of its potential.

Simple Management

Krista provides a single platform to deploy, monitor, and manage AI solutions in an enterprise. Its intuitive dashboard allows businesses to quickly identify areas of improvement and monitor performance. Krista’s AI iPaaS also offers a no-code studio that lets non-technical business users modify conversations and automation logic, providing greater flexibility and speed.

Faster time-to-market

Krista’s AI iPaaS enables enterprises to quickly and easily deploy AI solutions within their existing systems. With Krista, businesses can take advantage of no-code and low-code capabilities to quickly develop, test and deploy AI solutions without relying on traditional software development cycles or existing iPaaS platforms. This allows organizations to speed up time-to-market for their AI solutions and quickly capitalize on the potential benefits of AI. As a result, businesses can save time and money, and gain access to the latest generative AI technologies to stay ahead of the competition.

In Closing

Krista’s AI iPaaS is the perfect solution for unlocking the full potential of artificial intelligence and machine learning in your enterprise. With Krista, you can easily integrate generative or any other AI service into your systems and processes with low-code or no-code configurations. Krista enables you to quickly deploy scalable, cost-effective, and flexible AI solutions. Most importantly, Krista provides role-based security access for secure data management. If you’re looking for a reliable and scalable method to integrate different AI and machine learning models into your business, Krista’s AI iPaaS is a perfect choice. Contact us today to take advantage of what Krista AI integration has to offer.
